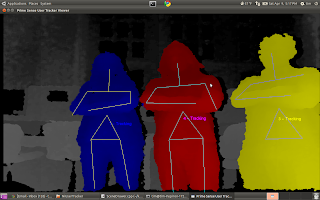

How does that work? Well, when we started, we had code that located the exact center pixel of the Kinect's field of vision and returned the z-value (depth) of that pixel. This data was processed on the BeagleBoard and converted into Dynamism commands, which were then sent to the robot over Ethernet. With this code, the robot could follow a person as long as they walked in a straight line and stayed in the center of the Kinect's view. This was no small undertaking, but it wasn't very exciting. So, we decided to add turning.
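A minimal Python sketch of that original straight-line logic might look like the following. It assumes the depth frame arrives as a list of rows of depth values in millimeters, and it treats 0 as a missing reading (an assumption about the driver). The target distance, the deadband, and the `FORWARD`/`BACKWARD`/`STOP` command names are hypothetical stand-ins, not the actual Dynamism commands.

```python
# Hypothetical command names; the real Dynamism command set is not shown here.
FORWARD, BACKWARD, STOP = "FORWARD", "BACKWARD", "STOP"


def center_depth(depth):
    """Return the z-value (depth) of the center pixel of a row-major frame."""
    rows, cols = len(depth), len(depth[0])
    return depth[rows // 2][cols // 2]


def straight_line_command(depth, target_mm=1500, deadband_mm=200):
    """Map the center pixel's depth to a forward/backward/stop command.

    target_mm and deadband_mm are assumed tuning values, not from the
    original code. A depth of 0 is treated as "no reading".
    """
    z = center_depth(depth)
    if z == 0:
        return STOP  # nothing measured at the center pixel
    if z > target_mm + deadband_mm:
        return FORWARD  # person is too far away: walk toward them
    if z < target_mm - deadband_mm:
        return BACKWARD  # person is too close: back away
    return STOP  # person is within the comfortable band
```

For a 640x480 frame, `center_depth` reads `depth[240][320]`. The deadband keeps the robot from twitching back and forth when the person stands roughly at the target distance.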

To do this, we divided the Kinect's image into a grid and checked the z-data in each block. The block with the smallest z-value held the object closest to the robot. Since we were operating in an obstacle-free environment, that closest object would be the person we were tracking, so the block with the smallest z-value told us where the person was relative to the robot. The robot was then told to turn toward that person and keep following them, both backwards and forwards. The robot also remembers the direction the person is moving in. So, if you are in the left part of the robot's field of vision, move to the right part, and then step out of its field of vision entirely, the robot figures that you have moved to its right and keeps moving right until it finds you again.
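The grid search and the direction memory could be sketched roughly as follows. The 3x3 grid, the `LEFT`/`CENTER`/`RIGHT` labels, and the `Follower` class are hypothetical, and 0 is again assumed to mean "no reading". Like the approach described above, it only works if the closest valid reading belongs to the person, which holds in an obstacle-free space.

```python
# Hypothetical turn labels; the real robot received Dynamism commands instead.
LEFT, CENTER, RIGHT = "LEFT", "CENTER", "RIGHT"


def closest_cell(depth, grid_rows=3, grid_cols=3):
    """Split the frame into a grid and return (grid_row, grid_col, z) for the
    block holding the smallest valid depth, or None if nothing was measured."""
    rows, cols = len(depth), len(depth[0])
    minima = {}  # (grid_row, grid_col) -> smallest depth seen in that block
    for r in range(rows):
        for c in range(cols):
            z = depth[r][c]
            if z == 0:
                continue  # assumption: 0 means the sensor got no reading
            cell = (r * grid_rows // rows, c * grid_cols // cols)
            if cell not in minima or z < minima[cell]:
                minima[cell] = z
    if not minima:
        return None
    cell = min(minima, key=minima.get)  # block with the closest object
    return cell[0], cell[1], minima[cell]


def turn_direction(grid_col, grid_cols=3):
    """Map a grid column to a turn. Assumes column 0 is the robot's left;
    some Kinect drivers mirror the image, which would swap LEFT and RIGHT."""
    if grid_col < grid_cols // 2:
        return LEFT
    if grid_col > (grid_cols - 1) // 2:
        return RIGHT
    return CENTER


class Follower:
    """Turns toward the closest object and remembers where it was last seen."""

    def __init__(self, grid_rows=3, grid_cols=3):
        self.grid_rows = grid_rows
        self.grid_cols = grid_cols
        self.last_seen = CENTER  # assumed default before anyone is detected

    def update(self, depth):
        hit = closest_cell(depth, self.grid_rows, self.grid_cols)
        if hit is None:
            # Nobody visible: keep heading toward where the person was last seen.
            return self.last_seen
        direction = turn_direction(hit[1], self.grid_cols)
        self.last_seen = direction
        return direction
```

Storing only the last seen direction is the simplest memory that reproduces the behavior described: if you drift from left to right and then vanish, `update` keeps returning `RIGHT` until a new closest object shows up.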

With this code, we have been able to implement the following behaviors:

- Straight lines forwards
  - Follow a person as they walk straight away from the robot

- Straight lines backwards
  - The robot walks backwards as the person walks toward it

- Forward turning
  - Follow the person as they walk away and turn to the left or right

- Backward turning
  - As the person walks toward the robot and turns, the robot walks backwards, turning in the opposite direction so that it keeps the person in the center of its field of vision

- Three-point turning
  - You remember the K-turn from driver's ed, right? This behavior is a good way to turn the robot around.

- Serpentine/slalom
  - The robot tracks a person as they travel in an S-shaped path

- Circle in place
  - The person keeps a fixed distance from the robot and walks around it in a circle. The robot circles in place so that it continues to face the person.

- Target switching
  - Two people walk side by side; one peels off, and the robot tracks the other.

We've got some great footage that we're going to edit together after finals, but here is a quick video just for LOLzzz.