First, we had to make sure we understood the OpenNI code, which is no small feat since the code is uncommented and there is no documentation for it. Using the tried-and-true method of commenting out code, rebuilding everything, and seeing what happens, we were able to figure out how the code works.

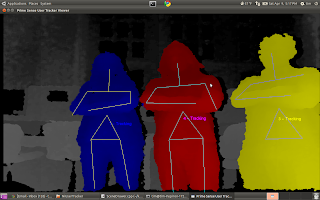

What was most helpful was commenting out the various functions that actually draw the skeleton, and then seeing how the image changed. One image from this process is shown above. Notice how the torsos have only triangles, and not X's. This is because we commented out the code that drew lines from each shoulder to the geometric center of mass (GCOM).

We came to find that the joint identifier XN_SKEL_TORSO is what the code uses to look up the position of the geometric center of mass. We then commented out all the drawing code, and added in this line:

DrawLimb(aUsers[i], XN_SKEL_TORSO, XN_SKEL_HEAD);

Thus, from here on out, instead of drawing a skeleton, we will draw a single line from the head to the center of the torso.

Now, we knew how the code draws a line from the head to the GCOM, but we didn't know how the code found where the head and GCOM were located. Further perusal of the code yielded the following:

XnSkeletonJointPosition joint1, joint2; //skeleton joint position objects

g_UserGenerator.GetSkeletonCap().GetSkeletonJointPosition(player, eJoint1, joint1); //reads position of player's first joint (eJoint1), store as joint1

g_UserGenerator.GetSkeletonCap().GetSkeletonJointPosition(player, eJoint2, joint2); //reads position of player's second joint (eJoint2), store as joint2

This code reads the positions of the two joints named by eJoint1 and eJoint2, and stores them in the variables joint1 and joint2.
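Each position read this way also comes with a tracking confidence (the fConfidence field of XnSkeletonJointPosition, as far as we can tell), so it can be worth skipping joints the tracker has lost before using their coordinates. The sketch below illustrates that check with stand-in types so it compiles without the SDK; the names `Vec3`, `JointPosition`, and `bothJointsReliable`, and the 0.5 threshold, are our own assumptions and not part of OpenNI.

```cpp
// Stand-in types that mirror the shape of the OpenNI joint structs,
// so this sketch compiles without the SDK. They are NOT the real types.
struct Vec3 {
    float X, Y, Z;
};

struct JointPosition {
    Vec3 position;      // joint location
    float fConfidence;  // tracking confidence, higher is better
};

// Hypothetical helper: true only if both joints are tracked well enough
// to be worth drawing or printing. The default threshold is an assumption.
bool bothJointsReliable(const JointPosition& a, const JointPosition& b,
                        float minConfidence = 0.5f) {
    return a.fConfidence >= minConfidence && b.fConfidence >= minConfidence;
}
```

In the real code, a check like this would sit right after the two GetSkeletonJointPosition calls and return early when it fails.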

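The code below copies the positions from joint1 and joint2 into the array pt, converts them from real-world coordinates to projective (screen) coordinates, and passes the resulting x and y components to OpenGL as the two endpoints of the line.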

XnPoint3D pt[2];

pt[0] = joint1.position;

pt[1] = joint2.position;

g_DepthGenerator.ConvertRealWorldToProjective(2, pt, pt);

glVertex3i(pt[0].X, pt[0].Y, 0);

glVertex3i(pt[1].X, pt[1].Y, 0);

Thus, we added the following code:

if(eJoint1 == XN_SKEL_TORSO){

printf("Torso Position in XY: %f,%f \n",pt[0].X,pt[0].Y);

}

if(eJoint2 == XN_SKEL_TORSO){

printf("Torso Position in XY: %f,%f \n",pt[1].X,pt[1].Y);

}

This code checks whether either joint of the limb being drawn is the torso (our GCOM) and, if so, prints that joint's x and y coordinates to the terminal. Thus, we have found out how to track the x and y coordinates of the GCOM of a human being!
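The two if blocks above could also be folded into one small helper. This is only a sketch with stand-in types: `Joint`, `Point3`, `torsoIndex`, and `reportTorso` are hypothetical names, and in the real code the joint values would be XnSkeletonJoint constants such as XN_SKEL_TORSO.

```cpp
#include <cstdio>

// Stand-ins for the OpenNI joint identifiers and point type (assumptions).
enum Joint { HEAD, TORSO };

struct Point3 {
    float X, Y, Z;
};

// Returns the index into pt (0 or 1) of the torso joint,
// or -1 if neither end of the limb is the torso.
int torsoIndex(Joint joint1, Joint joint2) {
    if (joint1 == TORSO) return 0;
    if (joint2 == TORSO) return 1;
    return -1;
}

// Prints the torso's x and y once per limb, if the limb touches the torso.
void reportTorso(Joint joint1, Joint joint2, const Point3 pt[2]) {
    const int i = torsoIndex(joint1, joint2);
    if (i >= 0) {
        std::printf("Torso Position in XY: %f,%f\n", pt[i].X, pt[i].Y);
    }
}
```

Keeping the index lookup separate from the printing means the same index can later be reused to fetch the third coordinate for the same point.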

Some results are below:

So, we're 2/3 of the way there. All that's left to accomplish our goal is to figure out how to pull the z data for the GCOM.

And we're close. So far, we have working code for finding the z component of the exact center of the Kinect's view range. Now, instead of having that code find the z data for a fixed point, we need to figure out how to feed it the x and y data for the GCOM we are already pulling, and have it return the z position for the point at that x and y intersection. It's late, and we're hitting a bit of a wall, but we are confident that after a bit of sleep, we can figure it out.
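One way the lookup could work is sketched below, under the assumption that the depth frame is a row-major array of 16-bit values, so that the pixel at column x and row y sits at index y * width + x. `DepthFrame` and `depthAt` are hypothetical stand-ins rather than OpenNI types or calls. It may also be worth checking whether the Z field of the joint position already carries the depth; we have not verified that here.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for one depth frame (not an OpenNI type).
struct DepthFrame {
    int width;
    int height;
    std::vector<std::uint16_t> data;  // width * height values, row-major
};

// Looks up the depth value at projective coordinates (x, y).
// Returns false, leaving out untouched, if the point is outside the frame.
bool depthAt(const DepthFrame& frame, float x, float y, std::uint16_t& out) {
    // Bounds check in floating point first; this also rejects NaN.
    if (!(x >= 0.0f && x < static_cast<float>(frame.width) &&
          y >= 0.0f && y < static_cast<float>(frame.height))) {
        return false;
    }
    const std::size_t col = static_cast<std::size_t>(x);  // truncate to pixel
    const std::size_t row = static_cast<std::size_t>(y);
    const std::size_t index = row * static_cast<std::size_t>(frame.width) + col;
    if (index >= frame.data.size()) {
        return false;  // frame buffer smaller than its stated size
    }
    out = frame.data[index];
    return true;
}
```

With something like this in place, the fixed center point would simply be replaced by the torso's projective x and y.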

All in all it was a good day, and we're happy with the results we got. We are still on track with our goals.
